Abstract

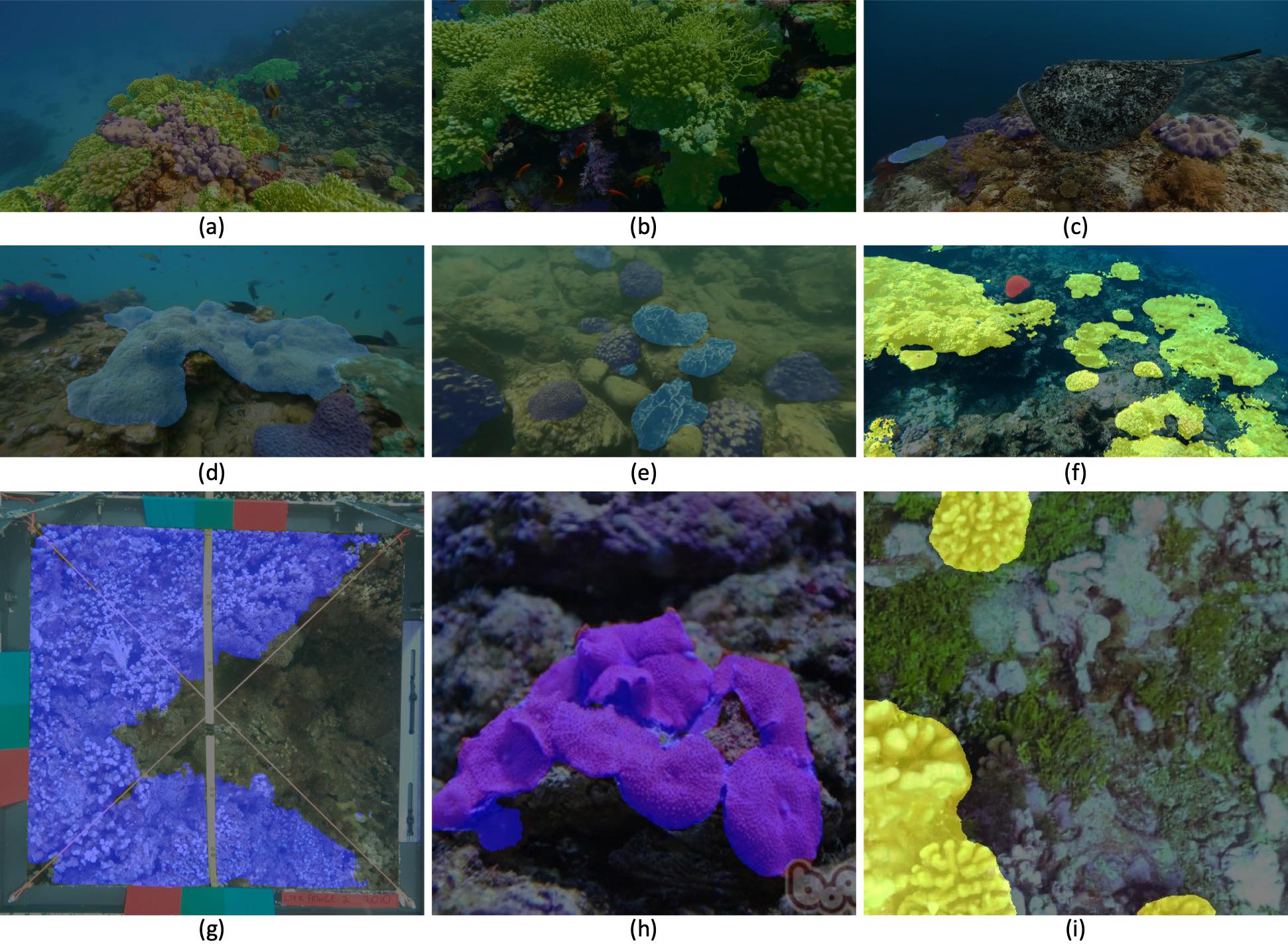

Underwater coral reef monitoring plays an important role in maintaining and protecting the underwater ecosystem. Extracting information from collected coral reef images and videos with computer vision techniques has recently gained increasing attention. Semantic segmentation, which assigns a semantic category to each pixel of an image, has been introduced to understand coral reefs. Satisfactory semantic segmentation performance has been achieved on large-scale in-air datasets with dense annotations. However, underwater coral reef understanding remains less explored, and existing underwater coral reef datasets are mainly captured under ideal, normal conditions with little variation. They cannot fully reflect the diversity and properties of coral reefs. As a result, coral reef segmentation models trained on them show very limited performance when deployed in practical, challenging, and adverse conditions. To address these issues, in this paper we propose an **in-the-wild** coral reef dataset named HKCoral to close the gap for in-situ coral reef monitoring. The collected dataset, with dense pixel-wise annotations, exhibits greater diversity and larger variations in appearance, viewpoint, and visibility. In addition, we adopt the fundamental coral growth form as the basis of our semantic coral reef segmentation, which enables strong generalization to unseen coral reef images from different sites. We benchmark the coral reef segmentation performance of 17 state-of-the-art semantic segmentation algorithms (including the recent generalist Segment Anything model) and further introduce a complementary architecture that better exploits underwater image enhancement to improve the segmentation performance of these models.
We have conducted extensive experiments with various up-to-date segmentation models on our benchmark, and the results demonstrate that there is still ample room to improve coral segmentation performance. Ablation studies and discussions are also included. The proposed benchmark could significantly enhance the efficiency and accuracy of real-world underwater coral reef surveying.
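The abstract does not state which metric the benchmark reports. A common choice for comparing semantic segmentation models is mean intersection-over-union (mIoU), computed from a per-pixel confusion matrix. The sketch below is a minimal, dependency-free illustration under that assumption. The integer class IDs (standing in for coral growth-form categories) and the `ignore_index=255` convention for unlabeled pixels are hypothetical, not taken from HKCoral.

```python
from typing import List, Sequence


def confusion_matrix(gt: Sequence[Sequence[int]], pred: Sequence[Sequence[int]],
                     num_classes: int, ignore_index: int = 255) -> List[List[int]]:
    """Count pixels into a num_classes x num_classes matrix.

    Rows index the ground-truth class and columns the predicted class.
    Pixels whose ground truth equals ignore_index (an assumed convention
    for unlabeled pixels) are skipped.
    """
    if len(gt) != len(pred) or any(len(g) != len(p) for g, p in zip(gt, pred)):
        raise ValueError("ground truth and prediction must have the same shape")
    cm = [[0] * num_classes for _ in range(num_classes)]
    for gt_row, pred_row in zip(gt, pred):
        for g, p in zip(gt_row, pred_row):
            if g == ignore_index:
                continue  # unlabeled pixel: does not count toward any class
            cm[g][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Average per-class IoU = TP / (TP + FP + FN).

    Classes absent from both ground truth and prediction (denominator 0)
    are left out of the average.
    """
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # true class c, predicted otherwise
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, true class otherwise
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


# Toy 2x3 label maps with three hypothetical classes; 255 marks an unlabeled pixel.
gt = [[0, 0, 1], [1, 2, 255]]
pred = [[0, 1, 1], [1, 2, 2]]
cm = confusion_matrix(gt, pred, num_classes=3)
print(cm)            # [[1, 1, 0], [0, 2, 0], [0, 0, 1]]
print(mean_iou(cm))  # (1/2 + 2/3 + 1) / 3 = 13/18
```

In practice, benchmarks usually accumulate a single confusion matrix over the whole test set and compute mIoU once, rather than averaging per-image scores. Summing the matrices returned by `confusion_matrix` for each image before calling `mean_iou` follows that approach.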

Citation

@article{ziqiang2024hkcoral,

title={HKCoral: Benchmark for Dense Coral Growth Form Segmentation in the Wild},

author={Ziqiang Zheng and Haixin Liang and Fong Hei Wut and Yue Him Wong and Apple Pui Yi CHUI and Sai-Kit Yeung},

journal={IEEE Journal of Oceanic Engineering (JOE)},

year={2024},

}